It seems the Department for Education (DfE) is governing by a process of endless consultations as a smoke screen to hide underlying strategy. The latest, on Post Qualification Admissions, has already decided to cease teachers’ grade predictions as part of the university admission system and offers only two options in a Hobson’s choice. Its purpose appears to be making the process fairer for less advantaged students, but it might make matters worse. Many well-informed observers are getting very concerned. The decision is predicated on two assumptions. Firstly, that teachers tend to under predict the grades for disadvantaged students, who may go on to get higher grades and miss out on courses at universities with higher entry requirements. Secondly, that the examination grades are an accurate reflection of a student’s ability and teachers are simply getting it wrong. Neither is strictly correct, and this longer post examines some of the flaws in the decision making by the DfE, led by Education Secretary Gavin Williamson. His track record with Ofqual and examinations last summer does not inspire confidence.

The mantra ‘Education, Education, Education’ of the Blair years was in recognition of the importance of education in developing the economy and wellbeing of all UK citizens. It was first said in the leader’s speech at the Labour Party Conference in October 1996.

“When the Tories talk about the spirit of enterprise they mean a few self-made millionaires… people have enterprise within them. They have talent and potential within them. Ask me my three main priorities for government and I tell you: education, education and education”

It resonated with many people and fuelled a mandate for increased spending on education that got him elected by a huge majority the following May.

Fast forward to the government of today. It seems ‘Consultation, Consultation, Consultation’ has become the alternate mantra of education and other policies.

There are numerous consultations, completed and ongoing, listed on the DfE Consultation Hub – Citizen Space. Essentially, they seek views on decisions already made. As a result, there may be some small changes made in the light of overwhelming opposition. This approach is intended to sugar coat the decisions and policies with a veneer of ‘democracy’ in action. The aim is to pick out some affirmation of policy, but does this lead to changes in policies already in train? The answer is: unlikely.

The Post-Qualification Admissions Reform Consultation.

This is no exception in the way government policy is announced under the guise of ‘democracy’. The ‘consultation’, ‘Post-Qualification Admissions Reform Consultation - Department for Education - Citizen Space’, simply announces a decision by the Department for Education (DfE) and puts strict limits on the scope of the responses. It was designed to seek answers to predetermined questions relating to two options to replace the current university admissions system in England (but will also apply in Wales and Northern Ireland). Changes to the examination assessments themselves or UCAS are not up for debate. Yet they are inextricably linked to the admissions process and must be considered as part of a legitimate review. The knock-on effect of the proposed changes demands this happens.

Option 1 proposes that candidates only apply to a university after their A-level, and presumably BTEC, results are announced and only then are candidates made offers and accepted.

Option 2 proposes that candidates apply to universities in advance of examination results, but all universities wait until the A-level and BTEC results are announced before candidates are made offers.

Currently, many students missing out on grades for a place are entered into ‘clearing’ whereby they consider other options if there are places available. The proposed consultation options essentially put all students into a ‘clearing’ process. It would seem to be a Hobson’s choice of the least bad option.

Both options would mean examinations should be brought forward to allow more time for marking and for the admissions process to spring into action. TEFS (15th January 2021 ‘A radical overhaul of examinations is needed as soon as possible’) has proposed that university admissions examinations move to the year before and involve more assessments of potential from teachers alongside evidence from continuous assessments. This is what happens in Scotland and Ireland in systems that are tried and tested.

Why do this?

The main plank of the DfE’s argument lies in an apparent desire to make the system fairer for disadvantaged students. This relies on ‘evidence’ that teachers’ predicted grades are often ‘inaccurate’. These determine the initial offers made by universities to candidates. Predicted grades can be under or over predicted and are considered to be the best possible outcome that a teacher expects a student to finally achieve. Later, some do better and some do worse in the examinations. This also means that a number of students apply to universities with lower entry requirements than the grades they later achieve. Others are over ambitious, but the more demanding university might take them with lower grades in any case. This latter practice may be abolished under a new regime.

In its preliminary equality analysis, the DfE relies a lot on the work of Gill Wyness and colleagues going back to 2016 and 2014/15 UCAS data. This is detailed and excellent work that should be taken in its totality. Particular emphasis is also placed on analyses by Wyness and colleagues from December 2019 in ‘Mismatch in higher education: prevalence, drivers and outcomes’. This looks at the extent of ‘mismatches’ between students and universities by comparing student attainment with varying university entry requirements. The so-called elite universities (such as the Russell Group) demand much higher grades on average for courses than others. Meanwhile, students predicted to achieve lower grades may get higher grades in the examinations but miss out and go to another institution. There they become ‘under matched’, with grades higher than the average intake on courses of lower quality in terms of earnings potential over five years. Others are ‘over matched’ and have lower grades than their peers in the university they attend.

Predicting grades seen as the main problem.

The DfE sees the root of this mismatching problem residing in the predicted grade process that determines offers. Eliminate that and students are free to trade in their final grades for a university course place that is better matched to the grades attained.

However, Wyness points to other factors, including geographical distance from the university of choice and type of school attended. The 2019 report observed that “There are substantial socio-economic status (SES) and gender gaps in mismatch, with low SES students and women attending lower quality courses than their attainment might otherwise suggest”. Students who travel away from home do not exhibit a mismatch gap to a great extent. However, “for students who stay close to home, the SES gaps are striking”. The DfE ignores the crucial observation that socio-economic factors are likely to be a bigger influence on ‘undermatching’. Students decide not to travel far from home to avoid expensive accommodation costs and so that they can retain established part-time jobs nearer home. This is a choice driven by economic factors. I have encountered many such students during my time lecturing in Belfast (TEFS 23rd August 2019 ‘Students working in term-time: Commuter students and their working patterns’).

However, Wyness misses this and concludes “This research suggests that there is an important role for information, advice and guidance, and university outreach programmes, to ensure that students are making informed choices”.

Predicting grades might be biased?

The DfE also used earlier data from Wyness and colleagues to focus on the accuracy of predicted grades and the likelihood of bias. This relies on some assertions cited from the work of Wyness going back to 2016 and ‘Predicted grades: Accuracy and Impact. A report for University and College Union’. That report was followed up by a highly cited and influential paper for the Sutton Trust in 2017, ‘Rules of the Game: Disadvantaged students and the university admissions process’. This considered additional factors likely to impact disadvantaged students such as the complexity of the UCAS form and the use of personal statements.

The current DfE consultation notes that the results of students from disadvantaged backgrounds are underpredicted and particularly concentrates on the likelihood of underpredicting the results of high achieving students from lower SES groups.

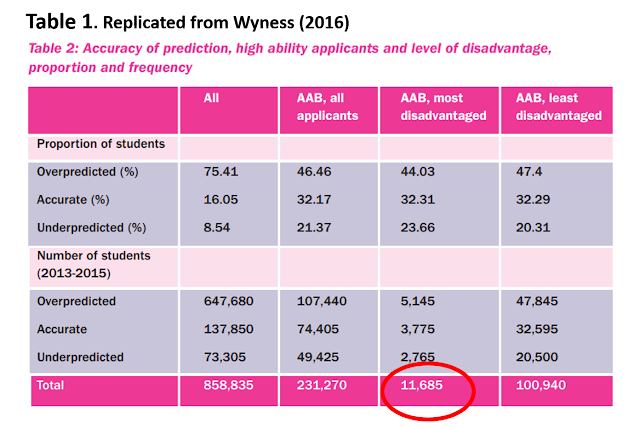

Figure 1 here is a copy of Figure 4 from Wyness (2016) and is directly replicated in Figure 3 of the consultation’s Equality Analysis to back this up.

Yet the results from the 2014/15 data in the Wyness (2016) report show that ‘over prediction’ is the biggest issue and this is more common overall for disadvantaged students. ‘Under prediction’ is seen as less of a problem: “As Figure 4 shows, that those applicants from the most disadvantaged backgrounds (ie POLAR groups 1 and 2) are the least likely applicants to have their grades accurately predicted. They are also more likely to have their grades over-predicted than those from more advantaged groups.”

It is also important to note that POLAR Version 3 quintiles are used as a proxy for disadvantage (TEFS 29th April 2019 ‘POLAR whitewash fails to cover all surfaces’ for a critical overview). Also, the difference in predicted and actual grades as +/- points are “defined by UCAS as the points score attached to the highest 3 A level grades achieved by the applicant, with the following points per grade used in the calculation: A*=6, A=5, B=4, C=3, D=2, E=1”.
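The points arithmetic quoted above can be sketched in a few lines of Python. This is only an illustration: the grade-to-points mapping comes from the UCAS definition quoted in the report, but the function names, the sign convention (predicted minus achieved, so a positive value means over prediction) and the example applicants are assumptions made here, not taken from Wyness (2016) or UCAS.

```python
# Points per grade, as quoted from the UCAS definition above.
GRADE_POINTS = {"A*": 6, "A": 5, "B": 4, "C": 3, "D": 2, "E": 1}


def best_three_points(grades):
    """Sum the points attached to the highest three A-level grades."""
    points = sorted((GRADE_POINTS[g] for g in grades), reverse=True)
    return sum(points[:3])


def prediction_gap(predicted, achieved):
    """Predicted minus achieved points (assumed convention).

    Positive = over prediction, negative = under prediction, 0 = accurate.
    """
    return best_three_points(predicted) - best_three_points(achieved)


# Hypothetical applicants for illustration only.
print(prediction_gap(["A", "A", "B"], ["A", "B", "B"]))  # 1  (over predicted by one point)
print(prediction_gap(["B", "B", "B"], ["A", "A", "A"]))  # -3 (under predicted by three points)
```

On this scale one point corresponds to one grade in one subject, so a gap of +1 means the applicant fell one grade short in a single A-level.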

The fate of high ability students.

The main issue is seen as under prediction of the results of disadvantaged students who get grades at the higher end. This is stated in the Equality Analysis as:

"Wyness (2016) finds that high attaining (AAB or better) but disadvantaged students are more likely to have their grades under-predicted than high ability students from more advantaged backgrounds. This means that PQA is likely to have a positive impact on high attaining but disadvantaged students."

But the DfE consultation should have shown the evidence to back this up.

Wyness (2016) notes two important things.

1. “Only a small number of disadvantaged young people fall into the high-attainment category”.

2. “Among these AAB applicants, applicants from the most disadvantaged group are slightly more likely to have their grades under-predicted than those from the least disadvantaged groups”.

These conclusions are based upon Table 2 from Wyness (2016) and shown here in Table 1.

Using data from 2013 to 2015, ‘over prediction’ is shown as more common. The real scandal is that so few disadvantaged students get the higher grades as ringed in red.

Wyness also goes on to consider other factors such as type of school and probability of applying to a higher tier university. These problems might be fixed first by the DfE instead of accepting them as inevitable.

The real problem lies with the examinations.

In his recent critique of PQA for the Higher Education Policy Institute (HEPI) (in ‘Where next for university admissions?’), Mark Corver of DataHE deploys the most recent data from 2020 and comments on predicted grades with “If you view predicted grades as an estimate of how well someone might realistically do, and recognise that exam-awarded grades themselves have random noise, then there is no accuracy reason not to use predicted grades in university admissions”. He also observes that “Teachers are often implied to be incompetent or scheming when it comes to predicted grades. The data says they do a difficult job well. Perhaps clarifying the nature of predicted grades by expanding the current single value to a likely upper and lower level of attainment would help this be more widely understood”.

In the same HEPI report, former UCAS leader, Mary Curnock-Cook makes a similar point with, “Paradoxically, these ‘unreliable’ predicted grades are highly predictable in their unreliableness. Indeed, there may well be more unfairness inherent in awarded grades which the exams’ regulator, Ofqual, has admitted are only correct to within one grade either way”.

This idea arises from the tireless efforts of Dennis Sherwood who worked in a consultancy role for Ofqual in the past. It follows an article by Sherwood on the Higher Education Policy Institute (HEPI) site in November 2020, ‘School exams don’t have to be fair – that’s official’. There he concluded “To me, exam grades should be fair, and a key aspect of ‘fairness’ is reliability. And grades that are ‘reliable to one grade either way’ can never be fair”. He has analysed in detail the lack of reliability in the A-level examination grades awarded and the facts are uncomfortable.

In a paper for HEPI in January 2019, Sherwood also asked ‘1 school exam grade in 4 is wrong. Does this matter?’. By June of that year he followed up with ‘Students will be given more than 1.5 million wrong GCSE, AS and A level grades this summer. Here are some potential solutions. Which do you prefer?’. In answer, he offered several solutions for rectifying the reliability problem that are not being considered.

This ‘oversight’ was admitted by Ofqual as far back as November 2018 in ‘Marking consistency metrics – An update’ with “The probability of receiving the definitive grade or adjacent grade is above 0.95 for all qualifications”. They have persisted with the perverse boast that over 90% of their grades are reliable to within +/- one grade. This was reinforced last September at an Education Committee hearing by the Acting Ofqual Chief Regulator, Glenys Stacey, who noted that grades were “reliable to one grade either way”.

(TEFS reviewed the various arguments in December 2020 in a series of five related posts entitled ‘Examinations and “the ghost in the machine”’.)

How well do teachers predict grades?

It is astounding therefore that teachers are criticised by the DfE for failing to predict A-level grades ‘accurately’ when the grades themselves are only reliable to within +/- one grade to start with. Yet this is at the centre of the DfE’s reason to abolish predicting grades. But it seems logical that a teacher will tell a student with potential that they might get a good grade, adding the advice that they must work harder. Such encouragement is part of the professional teaching process and I did this often for my students in a university for years. Many need this to acquire more confidence in their own ability. Teachers must not be condemned for being ‘inaccurate’ if they look objectively at the potential of a student. The fact that they seem to over predict grades is entirely expected.

But if one bears in mind that the exam grades can be out by +/- one grade most of the time, it seems teachers are trying to hit a moving target (TEFS 11th December 2020 ‘Accuracy, reliability and the ‘William Tell’ effect’). If a teacher predicts BBB grades and the student gets AAA or CCC, this is within the margin of error for the examinations and the teacher is almost as reliable as Ofqual says they are. Wyness (2016) observed that “the majority of predicted grades were within 1-2 points”. In fact, 85.6% of teacher predictions fall within one grade over three A-levels. Indeed, it might be that teachers are better at determining the potential of a student than one-off examinations. After all, they have more data over time to go on. It appears Mark Corver is right and “they do a difficult job well”.
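The ‘one grade either way’ comparison can be made concrete with a short Python sketch. It is an illustration under stated assumptions: the tolerance is applied subject by subject, the predicted and achieved grades are listed in the same subject order, and the function name and example grades are hypothetical rather than drawn from Ofqual or Wyness (2016).

```python
# A-level pass grades from lowest to highest (U is left out for simplicity).
GRADE_ORDER = ["E", "D", "C", "B", "A", "A*"]


def within_one_grade(predicted, achieved):
    """True if each achieved grade is at most one grade from its prediction.

    Assumes both lists give the same subjects in the same order.
    """
    return all(
        abs(GRADE_ORDER.index(p) - GRADE_ORDER.index(a)) <= 1
        for p, a in zip(predicted, achieved)
    )


# Hypothetical prediction of BBB against three possible outcomes.
print(within_one_grade(["B", "B", "B"], ["A", "A", "A"]))   # True
print(within_one_grade(["B", "B", "B"], ["C", "C", "C"]))   # True
print(within_one_grade(["B", "B", "B"], ["A*", "A", "A"]))  # False
```

Note that BBB against AAA is a three-point difference when the grades are summed, so ‘within one grade per subject’ is a looser threshold than ‘within 1-2 points’ in total.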

Mixed responses.

These are varied and some key influential stakeholder organisations released their responses just before, or just after, the consultation closed on Wednesday (13th May 2021). In the light of the submissions and the problems raised, it is astounding that they do not appear to have been approached directly in advance of the proposals.

Some were in favour of reform, with UCU in the lead by advocating Option 1 and post qualification applications (UCU response to UCAS admissions report). This reflects their long-standing support for PQA. In doing so, they firmly rejected ‘UCAS's conservative proposals’ and cited their own joint report in 2019 with Graeme Atherton of the National Education Opportunities Network (NEON), ‘Post-qualification application: a student-centred model for higher education’. In contrast, the National Union of Students (NUS) response is qualified and sceptical with, “The recommendations here should, if implemented fairly and with student interest front and centre, make for a much fairer admissions system”. But hoping that “The wider use of contextual admissions will be vital for levelling the playing field between students from a wealth of backgrounds” may not be an achievable goal in the proposed PQA.

The UCAS proposals, ‘UCAS Response to Department for Education consultation on post-qualification admissions’, are indeed ‘conservative’ and incremental, but this might reflect greater experience in dealing with a complex operation. Their more detailed report released in April ‘Reimagining UK Admissions’ is backed up with a lot of data and evidence. UCAS leader, Clare Marchant, summed up the problems well with,

“UCAS has serious concerns about any model that would see all application and offer making activity happen after exams, and think this would lead to an increase in dropout rates, particularly in disadvantaged groups – the exact opposite of what we are trying to achieve through reform. It also runs the risk of making university offers purely about exam results, and not individuals, and isn’t inclusive of the full range of assessment techniques used, such as portfolios, auditions and interviews”.

A similar conclusion was reached by Mark Corver of DataHE in his contribution to the report for the Higher Education Policy Institute (HEPI) in March, ‘Where next for university admissions?’. ‘Over prediction’ was again highlighted alongside the potential for a negative impact on less advantaged students. Corver concludes that “Omitting predicted grades from admissions would result in a poorer matching of potential to places”. Using POLAR version 4 classifications, the outcome is convincingly stressed using more recent data from UCAS (UCAS Undergraduate sector-level end of cycle data resources 2020). As an aside, it is odd that the DfE consultation did not refer to the 2020 data, which are openly available.

In the same HEPI report, Vikki Boliver of Durham University looks to earlier work from January 2021 for the Nuffield Foundation, ‘Fair admission to universities in England: improving policy & practice’, and examines the university approaches to contextual admissions and how they might be improved, something that PQA will make difficult in practice. The former CEO of UCAS, Mary Curnock-Cook, also reflects upon earlier reviews of PQA and observes that PQA “might damage the prospects of more students than it improves”. These are warnings from those of considerable experience and must not be ignored.

TEFS response and the Universities UK verdict.

TEFS submitted a response that proposed Option 2 as the ‘least bad’ option and concurred with UCAS, Corver and Curnock-Cook that the reforms could make matters worse. In doing so, the weight of experience shown in the UCAS response was considered along with the proposals made by UUK last year.

The idea that university admission administrations could make offers and accept students in a window of a few weeks is clearly not realistic. Trying to automate it using ‘algorithms’ would be chaotic. Even moving the A-level examination dates forward by a few weeks would hardly compensate for the effort required or mitigate the chaos that would ensue. TEFS concluded that universities would need students to make earlier applications and register their interest in the preceding months. The student statement provides very important information and should be improved upon, not abolished. However, much more advice would be needed from schools at this point, and the danger lies in teachers still basing their advice on applications on predicted grades.

The most influential response to the consultation was undoubtedly from Universities UK (UUK). Their accompanying press release ‘Admissions reform must not leave students marooned without proper careers support’ summarised the position well in “Reform to the university admissions system should only take place if there is a commitment from the UK government to enhance investment in careers advice for applicants to boost student choice”. Like TEFS, they dismiss Option 1 as unrealistic and “worse than the present system”. The six-week timeframe would be “an unacceptably small window for universities and students to undertake fundamental components of the admissions process” and “potentially disrupt universities’ progress in promoting social mobility and improving opportunities for the most disadvantaged in society”. Something that also concerned the NUS.

This response follows from the recommendations made in UUK's Fair Admissions Review in November of last year. However, by accepting the idea of some form of PQA and the elimination of ‘conditional unconditional’ offers, they trapped themselves, and it would take at least three years to implement. There is acceptance that there might be some reform of the so-called Schwartz principles that should be revisited (Admissions to Higher Education Steering Group 2004 ‘Fair admissions to higher education: recommendations for good practice’). Interestingly, UUK declined to comment on the current admissions system.

In a very qualified favouring of Option 2, UUK offered many caveats that will test the PQA policy at its core. Their concerns were set out as eight tests to be passed for it to “truly result in a fairer system”.

The UUK Caveats.

The tests include five that closely match the TEFS submission. These are:

- Enhanced government investment in careers advice in the form of targeted, structured information, advice and guidance (IAG) before, during and after the application process.

- Guidance on what will replace formal, predicted grades, supported by evidence on the applicability and reliability of different aspects of prior attainment.

- Sufficient time in the cycle to prevent any disruption to widening access and contextual offer making strategies, and the early release of Free School Meals data to enhance these processes.

- Allowing around 5 choices for applicants in order not to limit disadvantaged student choice and levels of aspiration.

- Reforming the personal statement part of the application process so that it is better structured, made shorter and more direct, and accompanied by clear guidelines to acknowledge individuals' mitigating/extenuating circumstances.

It seems that experience in running a university, or running an admissions service, will trump the policy of starting a radical PQA process put forward by the DfE and Gavin Williamson. This brings back memories of the examinations debacle a year ago when instructions from Williamson to Ofqual turned out to be fatally flawed. He demanded maintaining standards as the primary objective and fairness was side-lined. He asked Ofqual to do the impossible and the resulting mess led to Ofqual taking the blame. If PQA goes ahead without proper thought, a bigger mess is likely to result. In the meantime, reforming the qualifications platform for admission, and better advice for all students, would yield better results in terms of fairness (TEFS 15th January 2021 ‘A radical overhaul of examinations is needed as soon as possible’). With deep reservations evident from UCAS, and their university masters represented by UUK, it looks like there will be no radical PQA change. It’s about time the DfE looked at wider structural problems that impact less advantaged students.

Mike Larkin, retired from Queen's University Belfast after 37 years teaching Microbiology, Biochemistry and Genetics. He has served on the Senate and Finance and Planning committee of a Russell Group University.
